ADD ON-AOM-CIBF-M1M

| Product code | : ADD ON-AOM-CIBF-M1M |

| Warranty | : |

| Origin | : |

| Condition | : 100% new |

| Price | : Contact us |

| (Price excludes VAT) | |

| Accessories included free of charge. | |

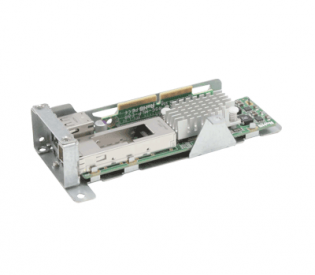

Compact and Powerful InfiniBand FDR Adapter

The ADD ON-AOM-CIBF-M1M is the most compact, yet powerful, InfiniBand adapter on the market. Based on the Mellanox® ConnectX-3 with Virtual Protocol Interconnect (VPI), it provides the highest-performing and most flexible interconnect solution for servers used in enterprise data centers and high-performance computing. The AOM-CIBF-M1M simplifies system development by serving both InfiniBand and Ethernet fabrics in one hardware design. It comes pre-assembled with a riser card and bracket, and its small microLP form factor fits Supermicro 12-node MicroCloud server systems.

• General

– Mellanox® ConnectX-3 FDR controller

– Compact size microLP form factor

– Single QSFP port and dual USB 2.0 ports

– PCI-E 3.0 x8 (8GT/s) interface
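As a rough sanity check on the interface figures above, the sketch below compares the usable bit rate of a PCIe 3.0 x8 link with that of a single FDR InfiniBand port. The per-lane rates and line encodings are taken from the public PCIe 3.0 and InfiniBand FDR specifications rather than from this page, and the QSFP port is assumed to run as a 4x link. The function name `effective_gbps` is purely illustrative.

```python
# Back-of-the-envelope check: can a PCIe 3.0 x8 slot feed one FDR InfiniBand port?
# Assumptions (not stated on the product page): standard PCIe 3.0 and FDR
# signalling rates and encodings, and a 4x (four-lane) QSFP link.

def effective_gbps(rate_per_lane: float, lanes: int,
                   payload_bits: int, coded_bits: int) -> float:
    """Usable bit rate in Gb/s after line-encoding overhead."""
    return rate_per_lane * lanes * payload_bits / coded_bits

# PCIe 3.0: 8 GT/s per lane, 128b/130b encoding, x8 link (per direction).
pcie3_x8 = effective_gbps(8.0, 8, 128, 130)

# InfiniBand FDR: 14.0625 Gb/s per lane, 64b/66b encoding, 4x port.
fdr_4x = effective_gbps(14.0625, 4, 64, 66)

print(f"PCIe 3.0 x8 : {pcie3_x8:.1f} Gb/s per direction")
print(f"FDR 4x port : {fdr_4x:.1f} Gb/s per direction")
print(f"Headroom    : {pcie3_x8 - fdr_4x:.1f} Gb/s before PCIe protocol overhead")
```

Under these assumptions the host link comes out at roughly 63 Gb/s against roughly 54.5 Gb/s for the port. PCIe packet overhead, which this sketch ignores, reduces the real margin further.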

• Connectivity

– Interoperable with InfiniBand or 10/40GbE switches

– Passive copper cable with ESD protection

– Powered connectors for optical and active cable support

• InfiniBand

– IBTA Specification 1.2.1 compliant

– Hardware-based congestion control

– 16 million I/O channels

– 256 to 4Kbyte MTU, 1Gbyte messages

• Enhanced InfiniBand

– Hardware-based reliable transport

– Collective operations offloads

– GPU communication acceleration

– Hardware-based reliable multicast

– Extended Reliable Connected transport

– Enhanced Atomic operations

• Ethernet

– IEEE Std 802.3ae 10 Gigabit Ethernet

– IEEE Std 802.3ba 40 Gigabit Ethernet

– IEEE Std 802.3ad Link Aggregation and Failover

– IEEE Std 802.3az Energy Efficient Ethernet

– IEEE Std 802.1Q, .1p VLAN tags and priority

– IEEE Std 802.1Qau Congestion Notification

– IEEE P802.1Qaz D0.2 ETS

– IEEE P802.1Qbb D1.0 Priority-based Flow Control

– Jumbo frame support (9.6KB)

• Hardware-based I/O Virtualization

– Single Root IOV

– Address translation and protection

– Dedicated adapter resources

– Multiple queues per virtual machine

– Enhanced QoS for vNICs

– VMware NetQueue support

• Manageability Features

• Additional CPU Offloads

– RDMA over Converged Ethernet

– TCP/UDP/IP stateless offload

– Intelligent interrupt coalescence

• FlexBoot™ Technology

– Remote boot over InfiniBand

– Remote boot over Ethernet

– Remote boot over iSCSI

• Protocol Support

– Open MPI, OSU MVAPICH, Intel MPI, MS MPI, Platform MPI

– TCP/UDP, EoIB, IPoIB, SDP, RDS

– SRP, iSER, NFS RDMA

– uDAPL

• Operating Systems/Distributions

– Novell SLES, Red Hat Enterprise Linux (RHEL), and other Linux distributions

– Microsoft Windows Server 2008/CCS 2003, HPC Server 2008

– OpenFabrics Enterprise Distribution (OFED)

– OpenFabrics Windows Distribution (WinOF)

– VMware ESX Server 3.5, vSphere 4.0/4.1

• Operating Conditions

– Operating temperature: 0°C to 55°C (32°F to 131°F)

• Physical Dimensions (L x W x D)

– Card PCB dimensions (without end brackets): 12.8cm (5.04in) x 5.0cm (1.97in) x 2.35cm (0.93in)

| Part Number | AOM-CIBF-M1M |

| Description | MicroLP InfiniBand FDR Adapter |

| Add-on Card | Compact and Powerful InfiniBand FDR Adapter |

| Key Features | • Single QSFP Connector |

| Compliance | RoHS Compliant 6/6, Pb Free |

| Compatibility Matrices | Servers: Supermicro MicroCloud and Twin Server Systems with microLP expansion slot |

| Specification | • General • Ethernet • Protocol Support |

| Qty | 1 |